Designing the future of A/B testing + shaping AI strategy at Optimizely

Product design

B2B SaaS

Master's project sponsored by Optimizely

CLIENT

UW Master’s project sponsored by Optimizely

TIMELINE

February - August 2025

TEAM

1 UX researcher

3 product designers

MY ROLE

Product design

UI + visual design

Design strategy

OVERVIEW

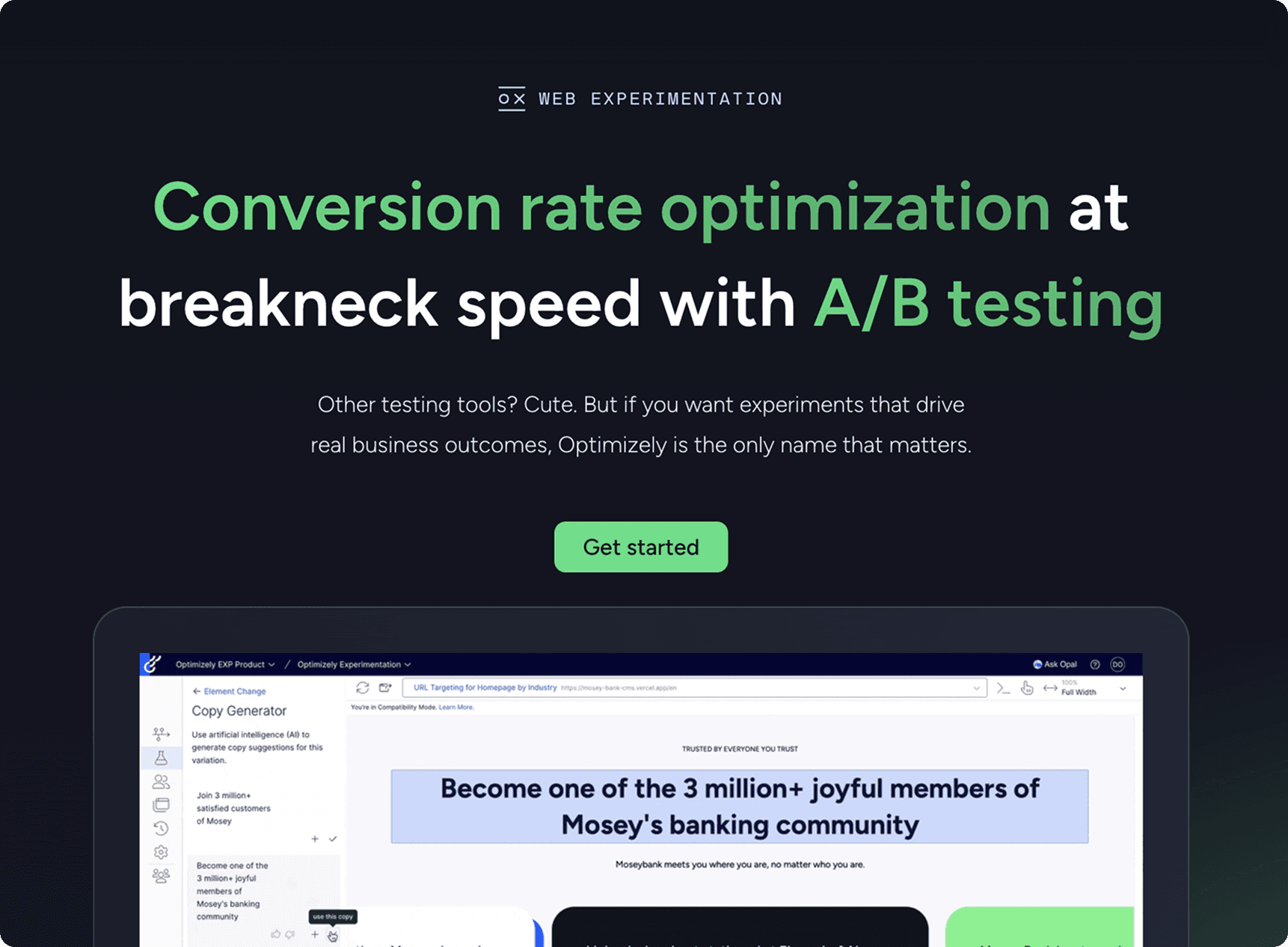

Context

Optimizely is a B2B SaaS startup that helps marketing teams improve performance through a suite of products designed to support their workflow. One such product is Web Experimentation (WebEx), an A/B testing platform where marketers can test digital experiences, measure uplift, and optimize websites for higher conversions.

With 1,300 customers, WebEx is a core offering, yet it has a 65% retention rate, the lowest of any Optimizely product. Many customers who purchase it struggle to get started.

1300+

WebEx customers - the most of any Optimizely product

65%

WebEx retention rate - the lowest of any Optimizely product

Two product managers at Optimizely asked our team to evaluate and improve the WebEx first-touch experience. The goal: help more customers achieve early success with A/B testing and see the value of the platform quickly, ultimately increasing retention.

OVERVIEW

Solution: WebEx 2.0

Through research, we learned that the WebEx first-touch experience was unintuitive and discouraging. New users lacked contextual guidance + feedback throughout the A/B test setup process, struggled to understand connections between test elements, couldn't make sense of the platform's language, and weren't sure what to do next after tests concluded.

We saw an opportunity to reimagine the WebEx platform by leveraging Opal - Optimizely's new enterprise AI technology - to provide users with personalized, in-context support throughout their experimentation journey. WebEx 2.0 makes it easy for anyone, regardless of experience level, to confidently set up and run impactful A/B tests that drive measurable results.

Meet Opal: the proactive AI collaborator

Users describe their test idea in plain language, and Opal generates a draft setup - flagging potential errors and utilizing knowledge of experimentation best practices to suggest improvements

Show, don’t tell: understand your test at a glance

A/B test components are displayed together in an interactive node layout paired with an editable website preview, so users can visualize how the pieces fit together, double-check their setup at a glance, and make edits all in one place

Launch confidently: checkpoints for every step

WebEx 2.0 features an intuitive experiment preview experience, a launch readiness checklist, a test duration estimator, and a shareable experiment summary, providing users with reassurance throughout test setup so that they feel supported + sure before pressing "launch"

Going beyond: turn one win into many

Opal suggests follow-up test ideas based on the results of concluded experiments, allowing users to easily iterate for continuous, data-driven impact

OVERVIEW

Impact

20+

Stakeholders influenced, leveraging our work to improve the WebEx platform

1

Shipped feature directly inspired by WebEx 2.0

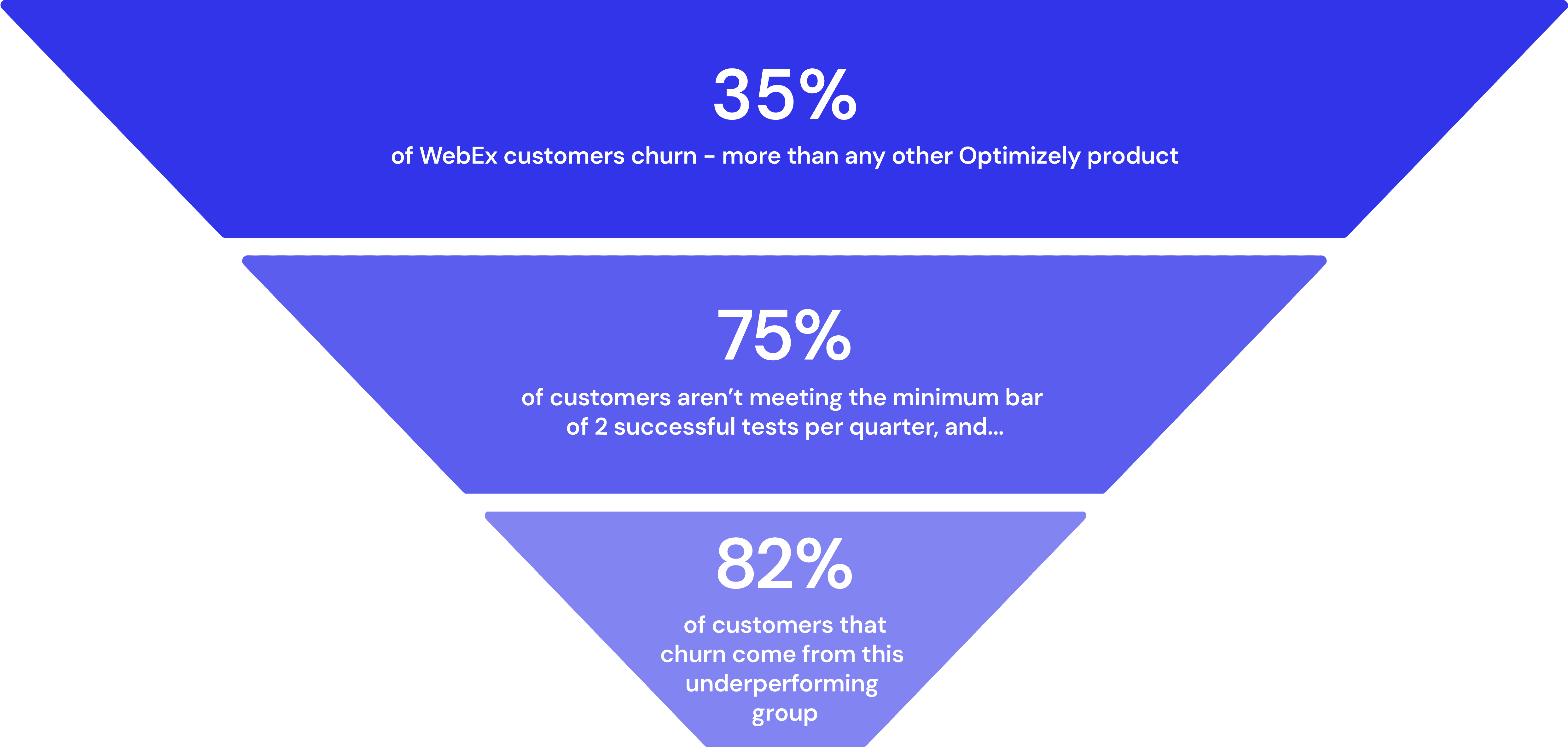

82%

Of churn addressed by helping customers build better tests

We successfully pitched WebEx 2.0 to 20+ stakeholders across Product, Engineering, and Research, securing buy-in for our AI-driven approach. Our work has since inspired the shipped "Experiment QA Agent", a feature that allows users to double-check the quality of their test setup with Opal and get suggestions for improvement before it goes live. Because 82% of WebEx churn comes from users who struggle to run successful tests, improving test quality through features like the QA agent has high potential to influence customer retention.

OVERVIEW

My contribution

As a product designer, I drove the design direction of WebEx 2.0, collaborating cross-functionally with research and product management to ensure decisions were rooted in real user and business needs. I owned the end-to-end design of key features + flows and led the implementation of Optimizely's design system across the entire high-fidelity prototype.

Design strategy

I translated initial research insights and stakeholder priorities into a clear product vision that was used as a roadmap to guide decisions throughout the design phase of the project.

Rapid prototyping

I rapidly designed and iterated on 10+ interactive prototypes at varying stages of fidelity, incorporating feedback from stakeholders, subject matter experts, and user testing to ensure resonance with our target audience: experimenters new to WebEx.

Visual + UI design

As the sole team member with past experience in visual design, I mentored teammates on design best practices and oversaw the implementation of Optimizely’s design system across the entire high-fidelity prototype, driving correct and consistent usage.

User research

I played a key role across 4 rounds of user testing and 12 SME interviews - moderating sessions, recruiting participants, synthesizing data, and co-developing study plans.

PROCESS

Project kickoff

To kick off the project, my teammates and I met with our primary stakeholders, two PMs at Optimizely. During kickoff, we learned more about WebEx, its customers, and why the first-touch experience has become a key area of concern.

Our big takeaway: WebEx is a technical product. Optimizely offers personalized onboarding support for new customers at an added cost, but many choose not to pay for it, and there isn’t enough staff to give every customer dedicated support even if they all wanted it. “Self-serve” customers who don’t pay for dedicated onboarding support from specialists struggle to get started with the platform and churn when it’s time to renew their contract.

Overall…

“My ultimate goal is to never have another customer not get started.”

-Britt Hall, Vice President of Product, Digital Optimization

PROCESS

Research

Methodologies at a glance

Desk research

📖

Lit review, competitive analysis + heuristic eval

SME interviews

💬

With 3 WebEx onboarding experts

Usability study

👩‍💻

Of the WebEx first-touch experience (n=7)

Desk research

After kickoff, my teammates and I dove into desk research. We conducted a literature review to understand SaaS onboarding best practices, a competitive analysis to compare WebEx onboarding with that of competitors in the B2B SaaS space, and a heuristic evaluation of the platform’s current self-serve first-touch experience.

SME interviews

Moving into the next phase of research, our main objective was to develop a deep understanding of the current WebEx first-touch experience for self-serve customers. In particular, we wanted to figure out which parts are especially painful so that we could get at the root of why self-serve customers struggle to run successful A/B tests and ultimately churn.

Our primary research question:

What are the early experiences of self-serve users on the WebEx platform?

But…my teammates and I had no knowledge of or experience with A/B testing ourselves - let alone the WebEx platform. To get up to speed on the fundamentals, we decided to interview three WebEx experts at Optimizely with hands-on experience helping new customers get started:

Value + Adoption Advisor

Lead Strategy Consultant

Engagement Manager

These experts emphasized four key concepts that new users need to understand to successfully set up and run their first A/B test with WebEx:

An article on the Optimizely marketing website on how to make an A/B test plan

Test design

Coming up with an experiment hypothesis and a solid plan for testing that hypothesis

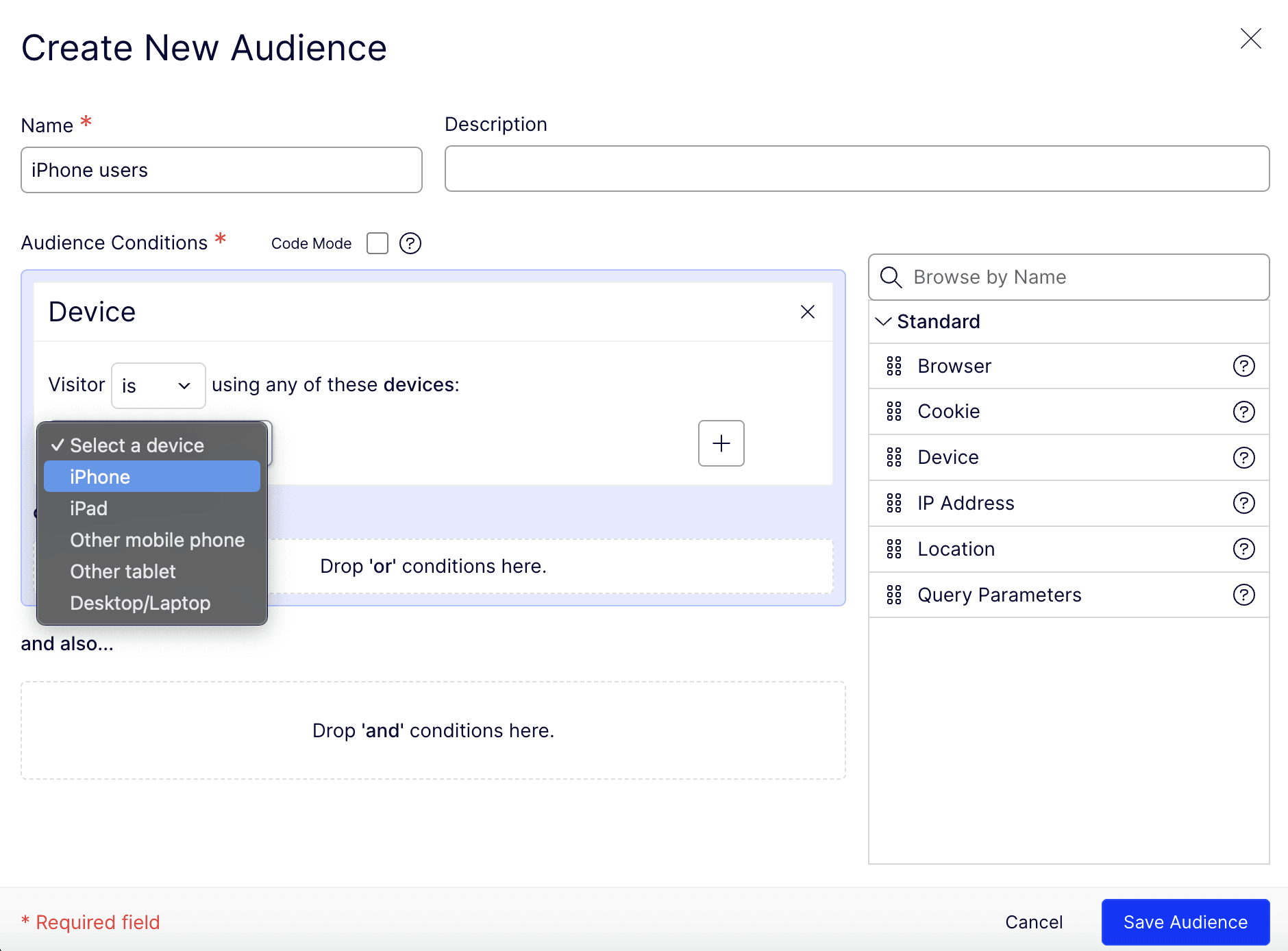

Audiences

Identifying a subset of site visitors to show the experiment to and setting that audience up using the platform

Creating a new audience on the WebEx platform

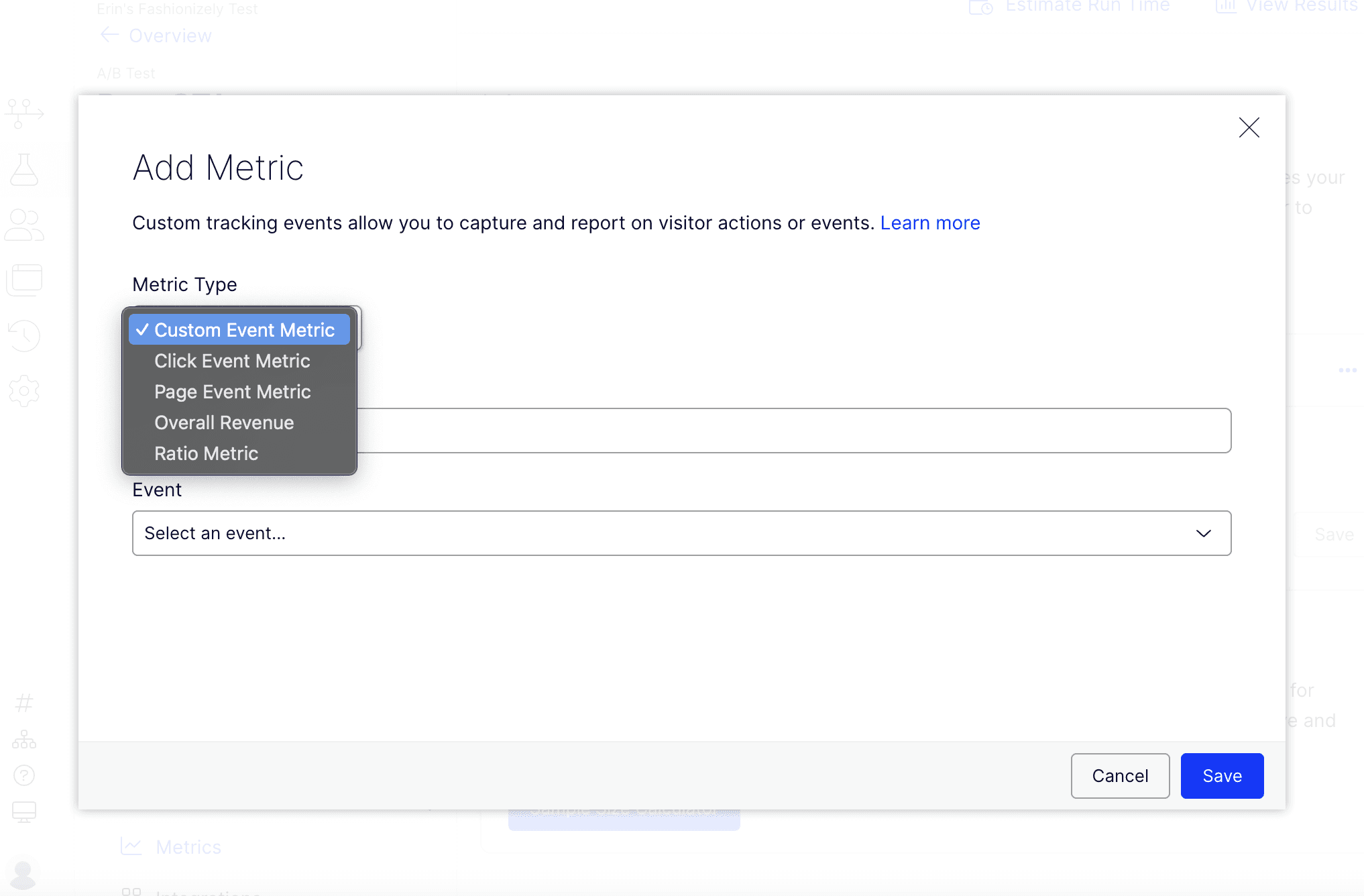

Adding a metric + corresponding event to an experiment on the WebEx platform

Events and metrics

Defining what the experiment is measuring - such as a change in clicks, conversions, revenue, or bounce rate - determining what visitor action (event) triggers that metric to be tracked, and properly configuring events + metrics through the platform

Visual editor

Editing experiment variations directly via WebEx’s built-in visual editor

The visual editor allows users to make changes on their website to create experiment variations without coding

For our next research method - usability testing - we focused on evaluating how well the self-serve first-touch experience supports new users in understanding these core concepts.

Usability study

We conducted a usability study with 7 participants to simulate the WebEx first-touch experience + collect rich, real-time data and insights on how new users navigate the platform. Our participants were marketing or marketing-adjacent professionals with at least some A/B testing experience who had never used Optimizely.

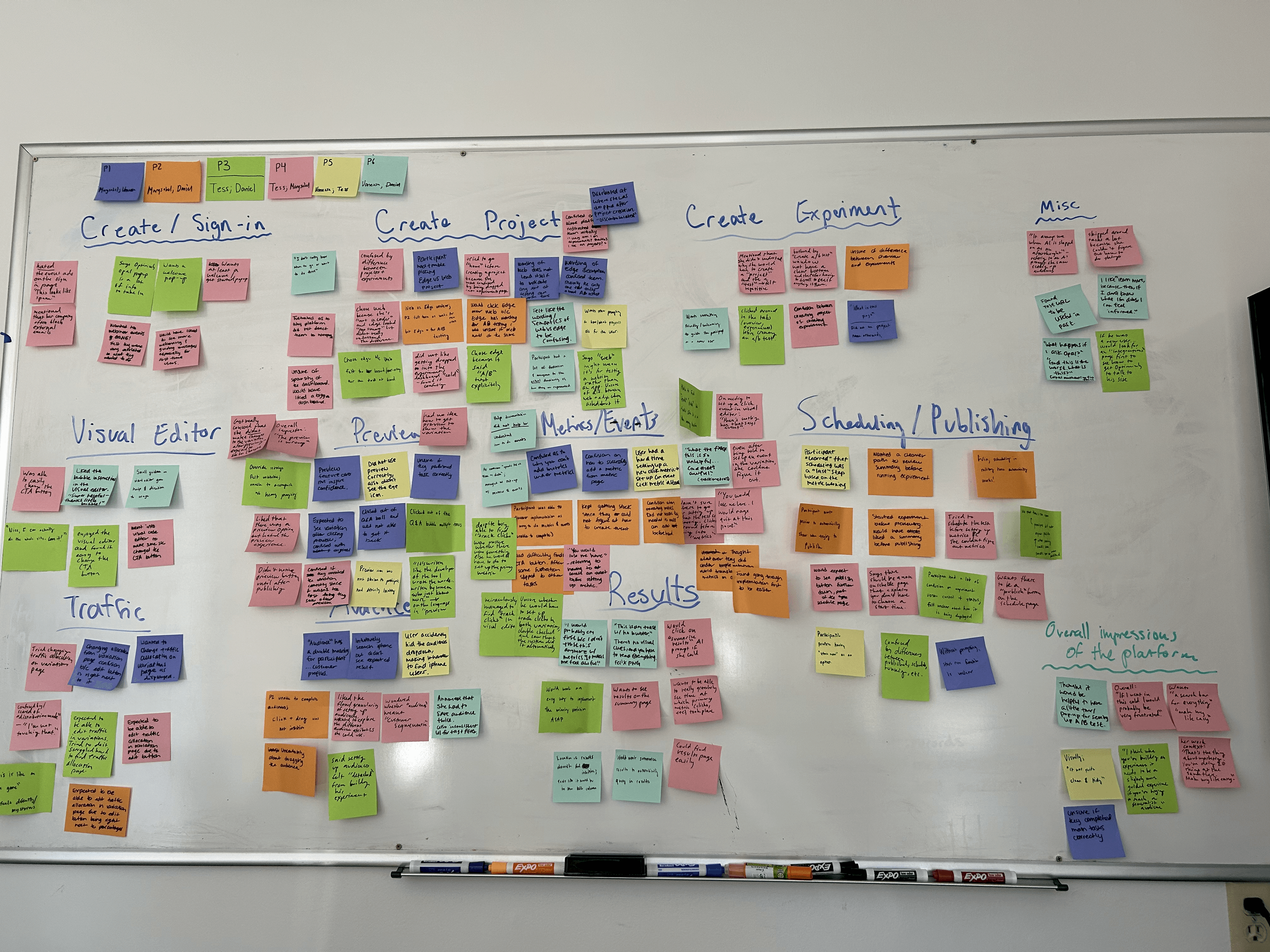

A snapshot of our data affinitization process - grouping observations by stage in the A/B test setup process

During the usability study, participants played the role of a marketer at an ecommerce company. We had each participant log into WebEx as a new user and set up an A/B test. After many rounds of affinity mapping + synthesis, we distilled our data into 3 key findings.

Key Findings

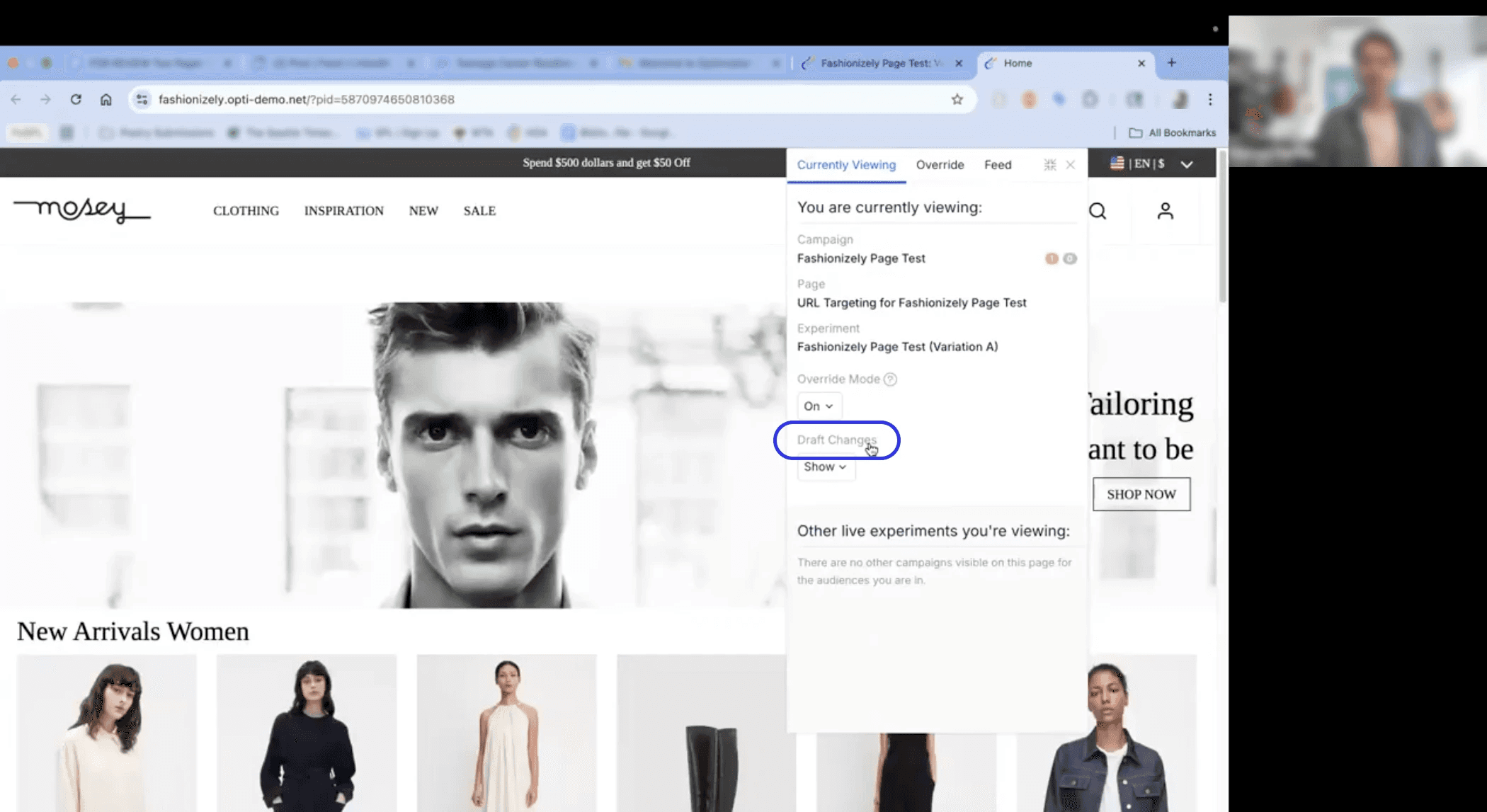

P3, confused by the platform's language, and therefore unable to successfully preview their experiment

Key information for first-time users is either missing or misaligned with their mental models.

As a result, they’re left unsure of what to do, where they are, or what terms mean.

“Some of this feels like the language is written for someone who just knows more...draft changes...I don't know what that means.” -P3

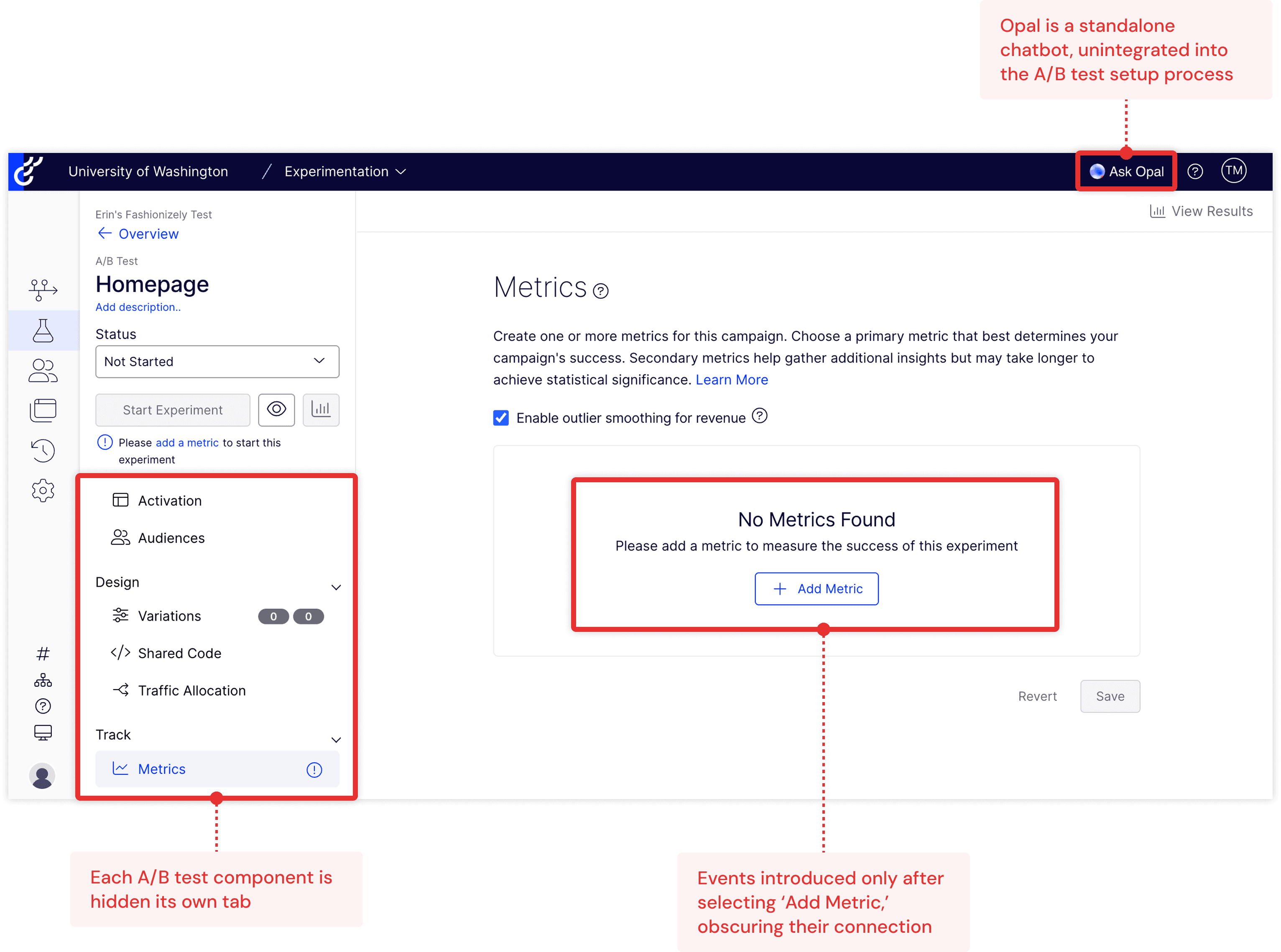

P5 getting stuck on metric + event setup. In order to track a metric, the user must set up a corresponding event first, but event setup happens in a separate part of the WebEx platform that users aren't directed to

Metric + event setup is the hardest part of the process.

6/7 participants couldn't figure out how to track metrics on their website through the platform, which meant they couldn't achieve their goal of setting up an A/B test.

“You would lose me here. I would rage quit at this point.” -P4

P4, attempting to preview their experiment variation and then questioning whether they had even set up the variation in the first place due to confusion around the preview feature

Users lose confidence as they move through experiment setup because the platform doesn’t provide guidance or feedback.

By the end, trust in their test + its results has diminished.

“I would probably cry first because I don’t trust [the results]. It makes me feel awful” -P6, when asked what they would do to view their test results

The big, overarching takeaway:

For self-serve users, onboarding to WebEx is a painful, unintuitive process that causes frustration, self-doubt, and distrust due to a lack of contextual guidance + feedback. High-friction moments - like metric and event setup - could cause abandonment of the platform altogether.

Bonus finding: Opal, Optimizely's new AI chatbot

Midway through our research, Optimizely launched Opal, an AI chatbot, into their platform. While we hadn’t intended for our research to focus on Opal, during usability test sessions we observed how participants interacted with it in the context of first-touch onboarding and ultimately synthesized these observations into a "bonus" finding.

What we found: participants were intrigued by Opal, and wanted it to step in and assist them in high-friction or tedious moments during A/B test setup - but in its current form, as a standalone chatbot, they weren’t able to get help from it successfully.

(8:00) “I see there's Opal who is waiting to talk to me. I hate AI. I don't want to have to ask Opal anything. [laughs]”

(31:00) “What happens if I ask Opal?”

(44:00) “I could ask Opal.”

(70:00) “Maybe Opal will answer those questions.”

- P6, initial impression of Opal upon account creation. Later, uses Opal to try and answer their questions about Optimizely

PROCESS

Design strategy + ideation

Transitioning from research into design, my teammates and I crafted a how-might-we statement to serve as a jumping-off point for ideation:

How might we reimagine the WebEx first-touch experience so that self-serve customers are able to seamlessly set up and run their first A/B test, use that initial success to build confidence, and as a result, see the value of the platform and continue experimenting?

Ideation + our chosen concept

A snapshot of our ideation + down-selection process - grouping similar ideas

Altogether, we came up with 50+ ideas and met as a group to affinitize + down-select based on which we felt best addressed our how-might-we statement, customer pain points discovered through research, and stakeholder priorities. Ultimately, we narrowed our ideas down to three high-level concepts:

User describes test idea + Opal generates setup

Users describe their A/B test idea in their own words or upload a pre-made test plan. Opal interprets it, then generates the experiment setup for them, flagging potential errors and leveraging knowledge of best practices to suggest improvements.

Node layout with interconnected test elements

All A/B test elements are displayed together as editable, interconnected nodes, helping to clarify relationships between them and allowing users to review their full setup at a glance.

A/B test setup as block coding

Block coding is a beginner-friendly approach to programming that represents text-based code as blocks that snap together like puzzle pieces. What if A/B test setup were like block coding? Representing test elements as blocks that fit together could help users who struggle to understand the logic of test setup when navigating the WebEx platform.

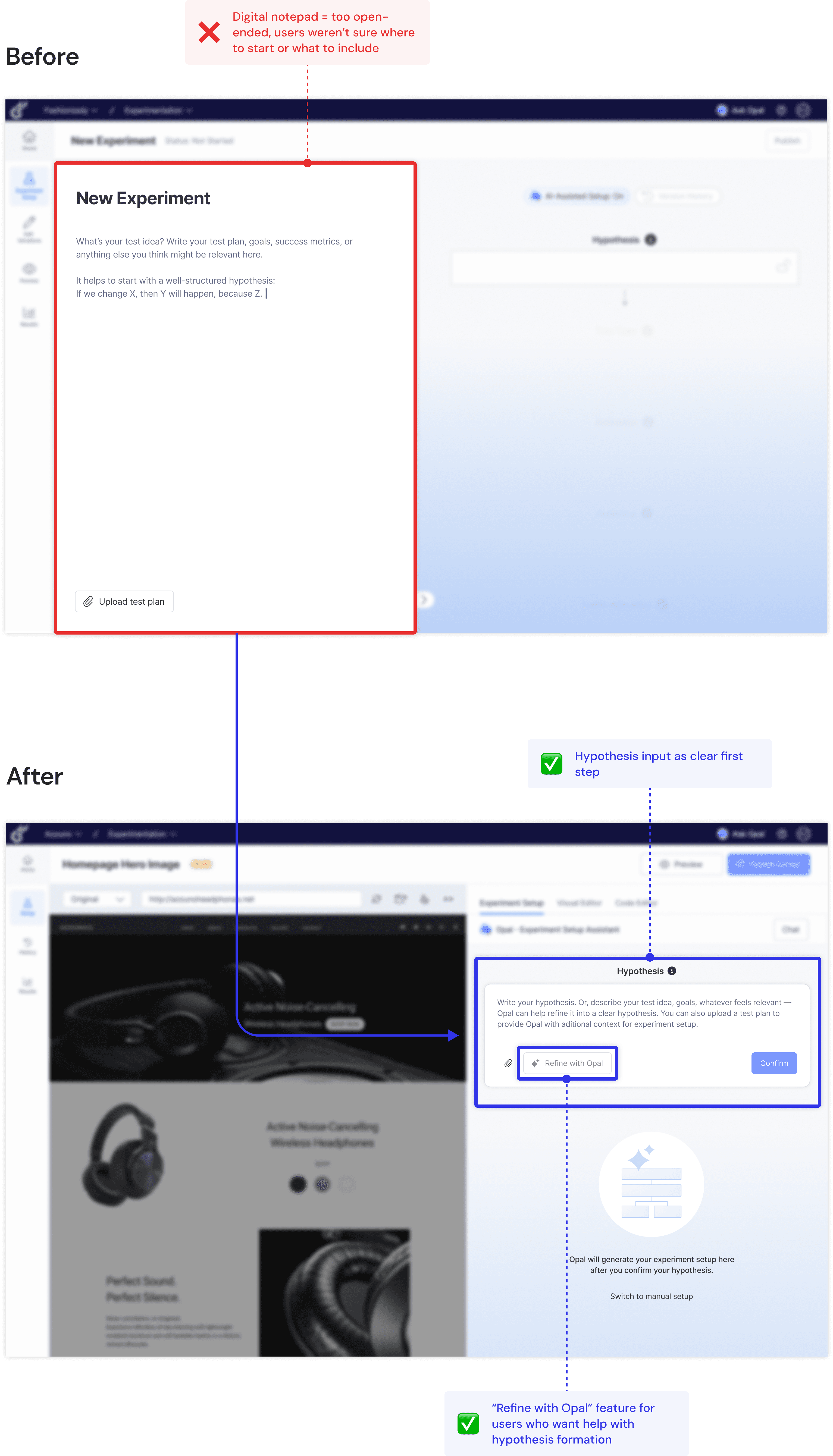

I advocated for combining all three concepts into one, envisioning an experiment setup experience where users describe their test idea in their own words on a digital “notepad” and Opal generates a node-based setup next to their input, with the block coding metaphor used to show the relationship between events and metrics.

To get my teammates on board, I quickly mocked up a mid-fi prototype of how I imagined the A/B test setup flow to look for this combined approach.

Before: the original WebEx A/B test setup screen

After: my mid-fi prototype of our concept for a reimagined A/B test setup experience

PROCESS

Prototyping + testing

Three 1-week design sprints

After presenting our concept to stakeholders and getting positive feedback, my team and I finished building out the interactive mid-fi prototype. It spanned the entire WebEx first-touch experience, which we defined as logging in for the first time as a new user up through launching an A/B test. I focused specifically on designing the Opal-assisted A/B test setup flow.

We were on a tight timeline, with a little less than a month left before final design hand-off, so we decided to break up the remaining time we had into three 1-week sprints of user testing + iteration:

User feedback + design iterations

The positive

01

Participants responded positively to the AI-generated A/B test setup flow. They easily identified (and appreciated!) contextual AI suggestions + error flagging

“It feels like, okay, AI is making me smarter and helping me execute faster. It's basically doing work for me, it feels like, so that's good. I would likely accept the suggestion.” -P1 (round 1, concept testing), Growth Marketing Manager

02

The node layout helped participants visualize their test + feel reassured that it was set up as intended

“If I think about how Optimizely does it now, there is no visualization of what the tests look like. And sometimes, I'm not so sure, actually, that I have all the steps properly selected or done. So this kind of visualization would help with that, to make sure that I have everything set up.” -P3 (round 1, concept testing), Digital Marketing Manager

Room for improvement

01

Open-ended "notepad" did not provide enough structure

The digital “notepad”, which we envisioned as an open-ended brainstorming space for users to describe their test idea, was too open-ended - participants weren’t sure what information to include and desired more structure. We assumed new users might feel intimidated by inputting a test hypothesis as the first step, but they actually gravitated toward the hypothesis node and wanted to type there instead of the notepad.

✅ Improved iteration: I removed the notepad and made hypothesis input the clear first step - while including a “refine with Opal” feature within the hypothesis node so that users still have the option to start with a rough idea and get help shaping it into a clear hypothesis.

02

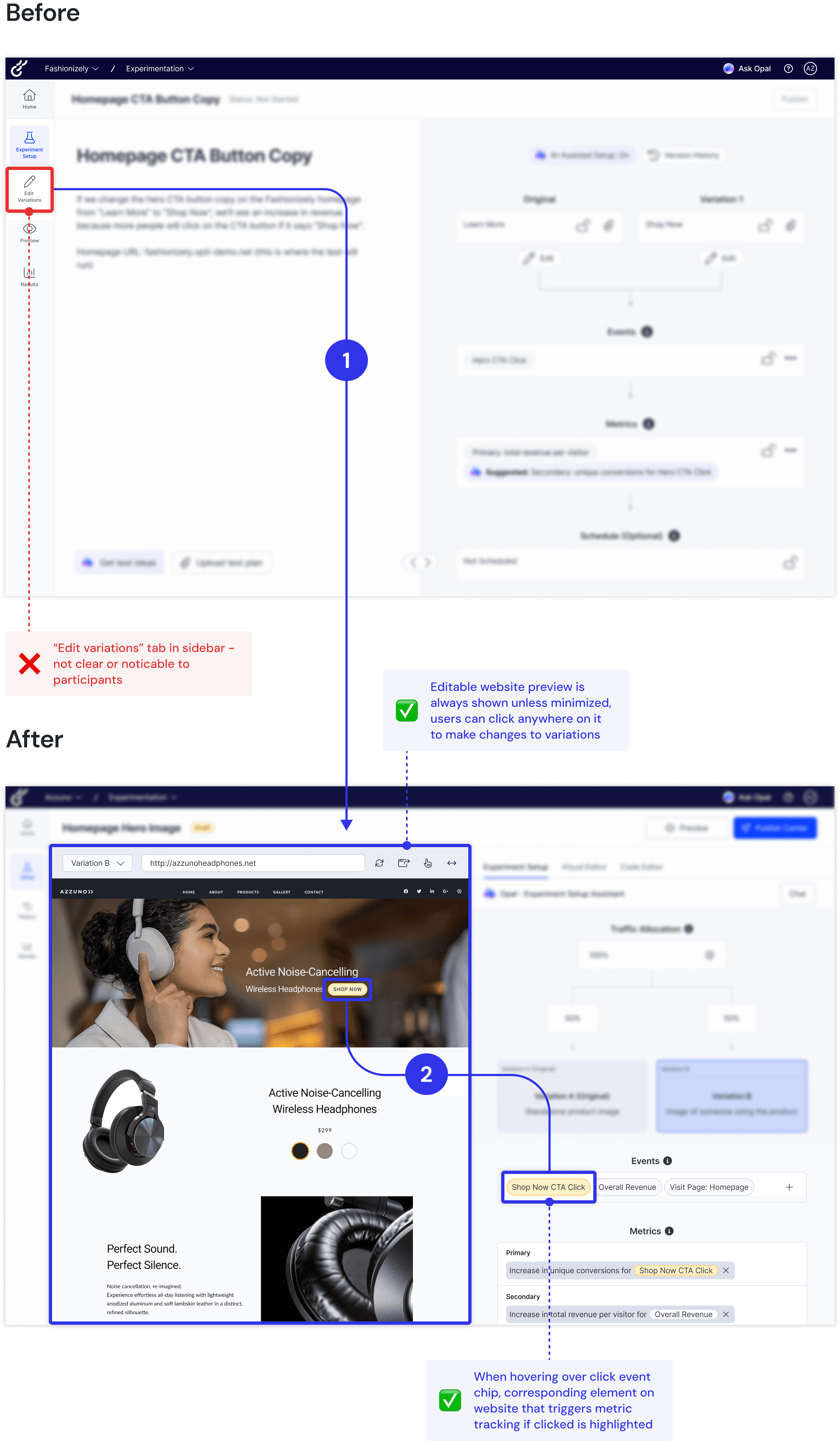

Unclear variation editing flow + missing metric context

Navigating from the “experiment setup” tab to the “edit variations” tab on the left side menu to edit experiment variations didn’t feel clear or natural to participants. Also, while the node layout + nested chips helped participants understand the relationship between events and metrics, they expressed a desire to see where the metric Opal had set up was being tracked on their website and edit if needed.

✅ Improved iteration:

To increase visual clarity, I added an editable website preview to the left side of the experiment setup screen, where the notepad used to be. Users can click anywhere on the website preview to edit variations.

When hovering over configured click event chips, the corresponding element on the editable website preview that triggers metric tracking when clicked is highlighted.

03

"Code required" error popup actions did not support collaboration with developers

In order for WebEx to track certain metrics, like revenue, a JavaScript snippet must be added to the head code of the website where the A/B test will run. The original WebEx platform doesn’t alert users when additional code is needed, so I added a clear error state + popup in our redesign. While participants noticed and understood the error, they emphasized that implementing additional code would be a major friction point for them as it typically involves reliance on a developer.

✅ Improved iteration: I updated the error popup to include links for sharing the code + instructions via multiple platforms to accommodate different workflows, since there wasn’t consensus among our participants around a preferred channel for communication with developers.

04

Participants wanted contextually relevant AI assistance beyond test setup

In addition to AI-assisted test setup, participants wanted help coming up with A/B test ideas for their website, but were skeptical that Opal would be able to provide impactful suggestions without proper context around their goals + customer base.

✅ Improved iteration: Knowing that Optimizely was already rolling out a "get test ideas" Opal-based feature, I considered how best to integrate and expand upon that feature within our redesign. Ultimately, I decided to add it to the A/B test results page, so that Opal could leverage data from the concluded experiment to provide relevant, iterative suggestions for further testing.

OUTCOME

Impact

We presented WebEx 2.0 to 20+ stakeholders at Optimizely across product, design, engineering, and research and handed off a high-fidelity prototype using Optimizely’s design system. Our work has since inspired the shipped "Experiment QA Agent", a feature that allows users to double-check the quality of their test setup with Opal and get suggestions for improvement before it goes live. Because 82% of WebEx churn comes from users who struggle to run successful tests, improving test quality through features like the QA agent has high potential to influence customer retention.

“I’ve been so impressed with this team. We’ve taken a ton of this work and already started implementing it which I think is the biggest sign of success. [Customers] can use our tool appropriately to build a really bad test, which is a pitfall that we run into a lot. So a lot of the work that this team did and recommendations that they gave us are already being folded into Opal. Today we are launching the first beta of the Optimizely Experiment QA Agent, which is the feature they talked about that gives warnings and updates and helps [the user] build a really good test. It is being built into Opal and going into our beta today.”

-Britt Hall, Vice President of Product, Digital Optimization

REFLECTION

What I learned

Designing for agility in startups

Optimizely is a fast-moving startup with shifting priorities, and this project reflected that! Had I known Opal would be rolled out midway through our project, I likely would have approached the generative research phase a bit differently to focus more on AI use habits among Optimizely customers. That said, no project is without its twists and turns, and ultimately, by staying flexible and communicating frequently with stakeholders, we were able to deliver an impactful, research-backed solution aligned with Optimizely’s evolving business goals.

Navigating a technical problem space

Coming into this project, I had no experience with A/B testing or WebEx. To deliver a relevant solution, I quickly ramped up by reading help docs, building relationships with Optimizely employees who were experts in experimentation, and checking in frequently to validate my understanding of the platform. This process not only accelerated my technical learning but also gave me empathy for new WebEx users who face the same steep learning curve. In the end, by being curious, asking questions, and providing my fresh perspective, I helped stakeholders gain insight into user pain points.